Identifying human factors as a source of error in laboratory quality control

Background

Statistical process control (SPC) is a key component of medical laboratory practice. It is a requirement in many countries and is mandated by the International Organization for Standardization (ISO) 15189 standard. However, several surveys indicate that quality control (QC) processes are often not followed (1-3), which raises the question of why this is the case.

Describing a QC system requires discussing the following components.

- An understanding of error and the types of error.

  - To design a system to detect an error, you must define the types of error you believe may exist. We assume that the analytical system is subject to two types of error: a shift in accuracy (introduction of bias), or systematic error, and a change in the precision of the assay, or random error. Both may be caused by reagent or calibrator changes, instrument changes, or operator errors.

- A method of testing the analytical process.

  - In the conventional QC model, this is usually achieved by running QC samples at defined frequencies and evaluating their results against a mean and standard deviation (SD) obtained from repeated measurements of these samples.

- Rules to assess whether the analytical system is in control.

  - These are normally based on the assumption that QC sample results follow a Gaussian distribution when the analytical process is in control. Some assumptions about the suitability of QC samples (e.g., commutability of the specimen matrix, Gaussian distribution of results) have been challenged.

- A process for troubleshooting and returning the analytical system to control.

  - This process should involve, at a minimum, checking the condition of all contributing reagents and calibrators, confirming that instrument maintenance is up to date, examining for changes in instrument operation and, finally, where warranted, rerunning the QC material to confirm that the system is back in control.

- A documented process to rerun and assess patient results that may have been affected by the error.

  - A range of protocols can be used to identify patient specimens in which a clinically significant error has been introduced.

- A documented system to notify clinicians of any amended result that may affect a patient’s diagnosis or treatment.

  - The whole purpose of a QC system is to protect patients from incorrect results, so ensuring that this does not occur is its core function.
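To make the two error types concrete, the following Python sketch simulates QC results from a Gaussian analytical process and then applies each kind of error. It is a minimal illustration under stated assumptions: the QC material, its target mean of 5.0 mmol/L and SD of 0.1 mmol/L, and the function name `simulate_qc` are hypothetical, and the Gaussian model is the same assumption noted above as open to challenge.

```python
import random
import statistics


def simulate_qc(n, target_mean, target_sd, bias=0.0, sd_factor=1.0, seed=1):
    """Simulate n QC results from a Gaussian analytical process.

    bias      -- systematic error: a shift added to the mean (result units).
    sd_factor -- random error: a multiplier applied to the in-control SD.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [rng.gauss(target_mean + bias, target_sd * sd_factor) for _ in range(n)]


# Hypothetical QC material: target mean 5.0 mmol/L, in-control SD 0.1 mmol/L.
MEAN, SD = 5.0, 0.1
scenarios = {
    "in control": simulate_qc(1000, MEAN, SD),
    "systematic error (bias +0.2)": simulate_qc(1000, MEAN, SD, bias=0.2),
    "random error (SD x 2)": simulate_qc(1000, MEAN, SD, sd_factor=2.0),
}

for name, results in scenarios.items():
    print(f"{name:30s} mean={statistics.mean(results):.3f}  SD={statistics.stdev(results):.3f}")
```

Systematic error moves the mean of the QC results while leaving their spread unchanged; random error leaves the mean in place and widens the spread, which is why different QC rules are sensitive to different error types.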

The scope and application of QC rules, including determining the optimal frequency for running QC samples, have become quite complex (4).

It is generally accepted that laboratory automation has improved significantly over the last few decades. Despite this, there has been little improvement in the understanding and implementation of QC. This article argues that this lack of progress is largely attributable to a limited number of human factors.

Complexity

The original QC rules were based on the mean plus or minus two SDs (a warning limit) or three SDs (an action limit). In the survey by Howanitz et al. (5), laboratories reported using up to 15 different QC rules, and over 40% of participants reported using more than one rule. Many participants even employed analyte-specific rules.
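A minimal sketch of the original two-limit scheme: a single QC result is compared with the mean ± 2 SD warning limit and the mean ± 3 SD action limit. The names `QCStatus` and `evaluate_qc` and the example values are illustrative assumptions; real QC software typically applies further rules across runs and control levels.

```python
from enum import Enum


class QCStatus(Enum):
    ACCEPT = "accept"
    WARNING = "warning"  # outside mean ± 2 SD
    REJECT = "reject"    # outside mean ± 3 SD (action limit)


def evaluate_qc(result: float, mean: float, sd: float) -> QCStatus:
    """Classify one QC result against the mean ± 2 SD and mean ± 3 SD limits."""
    if sd <= 0:
        raise ValueError("SD must be positive")
    z = (result - mean) / sd  # distance from the target mean in SD units
    if abs(z) > 3:
        return QCStatus.REJECT
    if abs(z) > 2:
        return QCStatus.WARNING
    return QCStatus.ACCEPT


# Hypothetical QC material with target mean 5.0 and SD 0.1.
for value in (5.05, 5.25, 4.65):
    print(value, evaluate_qc(value, mean=5.0, sd=0.1).value)
```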

However, the most recent surveys, including one of US laboratories (2,3), demonstrate that most sites use only the simplest rule (mean ± 2SD), that QC samples are run just once a day or once a shift, and that these controls are often run at the start of the analytical run. Because the effectiveness of conventional QC depends on how frequently QC samples are run, the persistence of a single QC event per shift means that an analytical error is unlikely to be detected promptly.
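The dependence on QC frequency can be illustrated with a deliberately simplified model, which is an assumption of this sketch rather than a model from the cited surveys: an error begins at a random point between QC events and persists until detected, each subsequent QC event detects it independently with probability `p_detect`, and patient results are reported as they are produced. With N patient results per QC interval, the expected number reported after onset and before detection is N/2 + N(1 − p_detect)/p_detect. The function name and workload figures are hypothetical.

```python
def expected_affected_results(n_between_qc: int, p_detect: float) -> float:
    """Expected patient results reported after an error starts and before QC detects it.

    Simplified model (an assumption of this sketch): the error starts at a
    uniformly random point in a QC interval and persists; each later QC event
    detects it independently with probability p_detect; n_between_qc patient
    results are reported per interval as they are produced.
    """
    if not 0 < p_detect <= 1:
        raise ValueError("p_detect must be in (0, 1]")
    # On average, half an interval passes before the first QC event after onset.
    first_interval = n_between_qc / 2
    # Missed QC events before detection follow a geometric distribution, mean (1 - p)/p.
    missed_intervals = (1 - p_detect) / p_detect
    return first_interval + n_between_qc * missed_intervals


# Hypothetical workload of 400 patient results per shift, p_detect = 0.5.
print(expected_affected_results(400, 0.5))  # QC once per shift
print(expected_affected_results(100, 0.5))  # QC four times per shift
```

Under these assumptions the two calls return 600 and 150: running QC four times as often cuts the expected number of affected results by the same factor, whatever the value of `p_detect`.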

Most QC flags (60%) are not reproduced when the QC sample is repeated (1,2), suggesting that the flag is more likely caused by the QC sample than by the analytical process being out of control. Moreover, most laboratories see a QC flag less than once a week (3). Laboratory staff therefore become desensitised to QC flags, because flags expected by chance alone far outnumber genuine QC failures.
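Why chance flags can outnumber real failures can be quantified for Gaussian in-control data: a single result falls outside mean ± k SD with probability erfc(k/√2), and with n independent control results per run the chance of at least one flag is 1 − (1 − erfc(k/√2))^n. The sketch assumes independent, Gaussian, in-control QC results, which real QC data may not satisfy; the function names are illustrative.

```python
import math


def p_outside(k: float) -> float:
    """P(|Z| > k) for standard normal Z: an in-control result outside mean ± k SD."""
    return math.erfc(k / math.sqrt(2))


def false_rejection_rate(k: float, n_controls: int) -> float:
    """Chance that at least one of n independent in-control QC results is flagged."""
    return 1 - (1 - p_outside(k)) ** n_controls


for k in (2, 3):
    print(f"mean ± {k} SD, two controls: {false_rejection_rate(k, 2):.2%} of in-control runs flagged")
```

With two controls, a mean ± 2SD rule flags roughly 8.9% of perfectly in-control runs, against about 0.54% for mean ± 3SD. The latter happens to match the "Ideal" rejection rate listed in Table 1, although the source does not state how that value was derived.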

Fundamentally, laboratories see QC as a regulatory requirement rather than as an integral part of their responsibility for patient safety (1,3).

Training/qualifications of staff

At the same time, trained staff have become more difficult to recruit, the qualifications of laboratory personnel performing these measurements in the United States have decreased, and personnel are no longer expected to have the level of training once required (5). Moreover, laboratory instrumentation has become more complex in recent years, amid evidence of declining adequacy of laboratory QC policies and of inadequate or inconsistent application of QC policy by supervisory and testing personnel (1,5). The impact of in-house training compared with training by an external provider has also been documented (6): in laboratories with new equipment where the manufacturer provided training, staff performed better than in laboratories that relied on in-house training. This suggests that in-house training may be less effective than training by external experts, possibly because in-house trainers take shortcuts.

Human factors in laboratory error

These surveys describe significant problems with the effectiveness of the conventional QC approach to SPC: despite its long history, SPC is still poorly understood and very poorly implemented. This appears to be due to several technical and human issues. The technical issues relate to the QC material and the setting of relevant QC limits. The human factors arise from the complexity of the task and the limitations of humans, which may be psychophysical (observational skill, experience), organisational (training, scope of decision making, feedback, precise instructions), related to the workplace environment (noise, work time, workstation organisation), or social (team communication, pressure, isolation). The International Union of Pure and Applied Chemistry (IUPAC) has classified these errors for the analytical laboratory (7) into errors of commission [mistakes and violations (of protocols)] and errors of omission (lapses and slips). The authors of that guide also provide examples of these errors in some common quantitative processes and use a statistical model (Monte Carlo simulation) to estimate their impact on common laboratory processes and on measurement uncertainty.
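The idea of using Monte Carlo simulation to see how human errors inflate measurement uncertainty can be sketched in a few lines. This is a much simpler stand-in, not the model in the IUPAC/CITAC guide: each simulated measurement carries Gaussian analytical error and, with a hypothetical probability `p_error`, an additional fixed offset representing a human error such as a pipetting slip. All names and parameter values are illustrative.

```python
import random
import statistics


def simulate_with_human_error(n, true_value, analytical_sd, p_error, error_size, seed=42):
    """Simulate n measurements with Gaussian analytical error plus occasional human error.

    With probability p_error a measurement also receives a fixed offset of
    error_size, standing in for a hypothetical slip such as a pipetting error.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        value = rng.gauss(true_value, analytical_sd)
        if rng.random() < p_error:
            value += error_size
        results.append(value)
    return results


baseline = simulate_with_human_error(100_000, 5.0, 0.1, p_error=0.0, error_size=0.5)
with_errors = simulate_with_human_error(100_000, 5.0, 0.1, p_error=0.05, error_size=0.5)
print(f"SD, analytical error only:      {statistics.stdev(baseline):.3f}")
print(f"SD, with 5% chance of an error: {statistics.stdev(with_errors):.3f}")
```

For this mixture the variance is σ² + d²p(1 − p), where σ is the analytical SD, d the error offset and p its probability, so the simulated SD can be checked analytically: √(0.01 + 0.25 × 0.05 × 0.95) ≈ 0.148, against 0.1 without human error.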

Thirty years ago, a survey of published work on human factors found that the estimated contribution of human error to accidents in hazardous technologies had increased fourfold between the 1960s and 1990s, from minima of around 20% to maxima of more than 90%. The most likely explanation is that equipment had become more reliable and that accident investigators had become more aware that safety-critical errors are not restricted to the process floor. Human error is defined as any significant deviation from a previously established, required, or expected standard of performance (8). How great, then, is the contribution of human factors to laboratory QC failure? There is little direct evidence; however, some significant errors were reported during the COVID-19 pandemic, when workload pressure probably led to errors that affected patients.

In a paper from 1995, Reason classified the causes of human error in several ways based on different underlying mechanisms (9). The basic distinctions are between the following:

- Slips, lapses, trips, and fumbles (execution failures) and mistakes (planning or problem-solving failures). Slips relate to observable actions and are associated with attentional failures. Lapses are more internal events and relate to failures of memory. Slips and lapses occur during the largely automatic performance of some routine task, usually in familiar surroundings.

- Mistakes are divided into rule-based mistakes and knowledge-based mistakes. Rule-based mistakes relate to problems for which the person possesses some pre-packaged solution, acquired through training, experience, or the availability of procedures (not necessarily those reflecting the organisation’s current policies). Knowledge-based mistakes occur in novel situations, where a solution has to be worked out on the spot without the help of a pre-packaged solution.

- Errors (information-handling problems) and violations (motivational problems). Violations are deviations from safe operating practices, procedures, standards, or rules. Usually, these are conscious violations where the deviation was deliberate.

- Active versus latent failures. Active failures are unsafe acts committed by those in direct contact with the client or on the process line. Latent failures arise in organisational and managerial spheres as the result of decisions taken at the higher echelons of an organisation, and their adverse effects may take a long time to become evident.

The sum of these human error types might best be considered in light of what is currently known about how the conscious (action-driving) and subconscious (information-gathering) parts of the human brain interact. It has long been estimated that the human brain receives and processes (in digital computing terms) approximately 11 million bits of information per second, whereas, by similar estimates, the conscious mind can process only about 50 bits per second. The information received by the subconscious brain is an accumulation of inputs from each of the five senses (10). Human “attention” is best described as the selective processing of some sensory information at the expense of other information, so that behaviour is as good as possible for the priority task at a given moment. Hence, vast amounts of sensory input are “filtered out” by the subconscious to present an actionable set of information to the conscious mind. This filtering diverts approximately 99.9996% of visual information and 99.97% of aural information from conscious attention (10). Mistakes, errors, and failures are likely to result when this filtering means that no signal to act reaches the conscious mind. Furthermore, an “attentional blink” has been shown to occur when individuals must rapidly switch between important and unimportant tasks, even when only a single distraction intervenes between the tasks.
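Taking the two throughput estimates quoted above at face value, the share of all incoming sensory information that is not consciously processed works out as follows, close to the 99.9996% cited for visual information:

$$
1 - \frac{50\ \text{bits s}^{-1}}{11 \times 10^{6}\ \text{bits s}^{-1}} \approx 1 - 4.5 \times 10^{-6} \approx 0.999995 = 99.9995\%
$$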

The greatest error-producing conditions include time shortage and a poor signal-to-noise ratio. Recall that most QC flags reported by laboratories are not reproduced on repeat testing (1). In a busy laboratory this scenario is likely to be the norm; hence, QC flags (whether visual or auditory) are likely to be filtered out at a subconscious level and fail to attract the attention that the required action demands. Where even a moderate number of alerts require no action, the “attentional blink” is likely to become a more frequent response. It is therefore likely that, as laboratory automation expands and the number and complexity of decisions to be made in a fixed period increase, the neurophysiological capacity of staff to make the critical decisions associated with QC flags will be challenged.
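The signal-to-noise problem can be expressed with Bayes’ rule: the probability that a given flag reflects a genuine error (its positive predictive value) depends on how often real errors occur, how often the rule detects them, and how often it flags in-control runs. The function name and input values below are hypothetical, chosen only for illustration.

```python
def flag_ppv(p_error: float, p_detect: float, p_false_reject: float) -> float:
    """Share of QC flags that reflect a genuine analytical error (Bayes' rule).

    p_error        -- probability that a QC event occurs during a genuine error
    p_detect       -- probability that the QC rule flags a genuine error
    p_false_reject -- probability that the QC rule flags an in-control run
    """
    true_flags = p_error * p_detect
    false_flags = (1 - p_error) * p_false_reject
    if true_flags + false_flags == 0:
        raise ValueError("the rule never flags under these inputs")
    return true_flags / (true_flags + false_flags)


# Hypothetical inputs: real errors at 1% of QC events, 90% detection.
print(f"{flag_ppv(0.01, 0.9, 0.09):.1%} of flags genuine at a 9% false-rejection rate")
print(f"{flag_ppv(0.01, 0.9, 0.0054):.1%} of flags genuine at a 0.54% false-rejection rate")
```

With these inputs fewer than one flag in ten is genuine at a 9% false-rejection rate, and lowering that rate to 0.54% raises the share to roughly 63%, illustrating how a noisy rule teaches staff that flags can be ignored.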

Evidence of human factors producing an error in QC

In the College of American Pathologists study reported separately by Howanitz and Steindel (1,5), survey participants were requested to provide retrospective data on each of four analytes for 6 months before the study. Participants recorded the number of patients, QC, and repeat tests, and were then asked to provide prospective out-of-control event data for each analyte over a 3-month period. The results are given in Table 1. The authors suggested that the difference between prospective and retrospective rates may relate to “how stringently laboratorians followed and recorded their policies and procedures, since it is considered that when there is oversight of a practice there is better adherence than when it is inspected retrospectively” (1).

Table 1

| Indicator | Cholesterol | Calcium | Digoxin | Haemoglobin | Ideal |
|---|---|---|---|---|---|
| Retrospective rejection rate | 0.36 | 0.43 | 0.43 | 0.24 | 0.54 |
| Prospective rejection rate | 0.56 | 0.58 | 0.65 | 0.36 | 0.54 |
| Laboratories whose policy is to repeat all patient samples (%) | 38.8 | 39.2 | 61.6 | 30.5 | 100 |
| Laboratories that repeated all patient samples (%) | 9.8 | 11.4 | 53.9 | 4.1 | 100 |

Howanitz et al. also described a lack of adherence to established laboratory procedures (5), which is of particular concern when a QC flag is detected. After troubleshooting, patient specimens should be repeated when analytical runs fail (11). Despite this, in the Howanitz survey, only 30% to 40% of participants had policies to repeat cholesterol, calcium, and haemoglobin measurements on patient specimens when QC exceptions occurred, and about 60% had such policies for digoxin (see Table 1). Of more concern, in laboratories where a policy to repeat patient samples was documented, most did not follow their own policies for cholesterol, calcium, and haemoglobin, and only a bare majority followed their policies for digoxin. In the survey by Westgard, over 30% of laboratories did not have reasonable repeat criteria, and nearly half would override the out-of-control flag and report results (3). The surveys of Howanitz and Westgard also found fewer reported QC failures than would statistically be expected, suggesting that failures are being missed. These findings represent both errors of commission (not following protocols) and errors of omission (lower than expected QC failure rates).

A possible solution to the impact of human errors

The human errors that occur in QC monitoring are likely to fall into one of two categories: slips and lapses by those making decisions on QC flags, or latent failures by those in senior positions who design the QC system (e.g., a low frequency of QC samples). A contributing factor has been described as overload: the person cannot help but err because the workload far exceeds his or her capacity to handle the situation (e.g., processing more workpieces in a shorter period). However, overload could equally refer to the amount of information a person has to process, the working environment, or psychosocial stress.

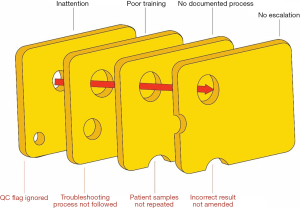

Thus, the major errors in the QC process reported in (1,5) are likely to be human as well as system based. How can these problems be overcome? The problems are multiple, ranging from poorly designed QC systems to, as described above, the limited ability of humans to deal effectively with repetitive monitoring processes that rarely fail but, when they do, may have devastating consequences for patient outcomes (Figure 1). Sector-wide acceptance of less qualified analytical staff, coupled with the use of non-standard QC rules (5), is likely to increase the frequency of these overlooked but consequential events (12). It therefore seems prudent to give urgent thought to effective ways of mitigating and controlling the impact of these types of event. The design of evidence-based QC systems is critical but beyond the scope of this article (2,13,14).

The most direct way to reduce human factors as a cause of error is to reduce human involvement in the process. As described above, the key components of QC are detection of an error (a QC sample value outside set limits); investigation of the error according to a set protocol (e.g., rerunning the QC sample, looking for instrument flags, checking for a new reagent or calibrator lot); and recalibration and repetition of failed patient samples according to a laboratory protocol. Most of these steps can be automated. Automated clinical decision support systems (CDSS), working in conjunction with a laboratory’s middleware, could divert patient specimens to an alternate instrument while the QC failure is investigated (assuming a track is in place), queue potentially compromised specimens for re-running, automatically compare the initial and re-run results, and assess the relevance of the flag, all without human intervention. Once the final assessment has been made, the instrument may be cleared for further operation or placed on standby for investigation by a qualified engineer. A CDSS could also include auto-verification and delta checking to identify patient sample mix-ups (15). Such software could reduce errors caused by human limitations in complex technical decision-making. Using artificial intelligence in analytical systems could replace human decision-making with a device with an uninterruptible attention span.
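The automated sequence described above can be sketched as a small rule set. Everything here is hypothetical: the names `handle_qc_flag`, `PatientResult` and `Outcome`, the use of a single allowable difference to compare initial and re-run results, the policy of releasing the instrument when the QC flag does not repeat, and the callables standing in for middleware and instrument interfaces. It is not a vendor or middleware API, and it omits recalibration, instrument flags and reagent-lot checks.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PatientResult:
    specimen_id: str
    value: float


@dataclass
class Outcome:
    instrument_status: str  # "released" or "standby"
    amend_and_notify: list[str] = field(default_factory=list)


def handle_qc_flag(
    rerun_qc: Callable[[], bool],
    results_since_last_good_qc: list[PatientResult],
    rerun_specimen: Callable[[str], float],
    allowable_difference: float,
) -> Outcome:
    """Hypothetical middleware rule set triggered by a QC flag (illustrative only)."""
    # 1. Rerun the QC sample. A flag that does not repeat is treated as a
    #    QC-material problem and the instrument is released.
    if rerun_qc():
        return Outcome(instrument_status="released")

    # 2. Failure confirmed: place the instrument on standby for investigation and
    #    re-run every specimen reported since the last acceptable QC
    #    (rerun_specimen stands in for an alternate instrument on the track).
    outcome = Outcome(instrument_status="standby")
    for result in results_since_last_good_qc:
        repeat_value = rerun_specimen(result.specimen_id)
        # 3. Compare initial and re-run results; differences beyond the allowable
        #    limit are queued for result amendment and clinician notification.
        if abs(repeat_value - result.value) > allowable_difference:
            outcome.amend_and_notify.append(result.specimen_id)
    return outcome


# Hypothetical calcium results (mmol/L) and re-run values from an alternate instrument.
initial = [PatientResult("S1", 2.40), PatientResult("S2", 2.10), PatientResult("S3", 2.65)]
rerun_values = {"S1": 2.38, "S2": 2.35, "S3": 2.66}

outcome = handle_qc_flag(
    rerun_qc=lambda: False,  # the QC failure repeats
    results_since_last_good_qc=initial,
    rerun_specimen=rerun_values.__getitem__,
    allowable_difference=0.1,
)
print(outcome.instrument_status, outcome.amend_and_notify)
```

In this example only specimen S2 differs from its re-run by more than the allowable 0.1 mmol/L, so it alone is queued for amendment while the instrument stays on standby.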

Laboratories are entering the next phase of innovation in reducing patient error, one that will be driven by middleware that continuously monitors analytical performance and some pre-analytical errors.

Acknowledgments

Funding: None.

Footnote

Provenance and Peer Review: This article was a standard submission to the journal. The article has undergone external peer review.

Peer Review File: Available at https://jlpm.amegroups.com/article/view/10.21037/jlpm-23-7/prf

Conflicts of Interest: Both authors have completed the ICMJE uniform disclosure form (available at https://jlpm.amegroups.com/article/view/10.21037/jlpm-23-7/coif). TB serves as an unpaid editorial board member of Journal of Laboratory and Precision Medicine from December 2016 to January 2025. The other author has no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Steindel SJ, Tetrault G. Quality control practices for calcium, cholesterol, digoxin, and hemoglobin: a College of American Pathologists Q-probes study in 505 hospital laboratories. Arch Pathol Lab Med 1998;122:401-8.

- Rosenbaum MW, Flood JG, Melanson SEF, et al. Quality Control Practices for Chemistry and Immunochemistry in a Cohort of 21 Large Academic Medical Centers. Am J Clin Pathol 2018;150:96-104.

- Westgard S. The 2017 Great Global QC Survey Results. 2017. Available online: https://www.westgard.com/great-global-qc-survey-results.htm

- Westgard S, Bayat H, Westgard JO. Mistaken assumptions drive new Six Sigma model off the road. Biochem Med (Zagreb) 2019;29:010903.

- Howanitz PJ, Tetrault GA, Steindel SJ. Clinical laboratory quality control: a costly process now out of control. Clin Chim Acta 1997;260:163-74.

- Punyalack W, Graham P, Badrick T. Finding best practice in internal quality control procedures using external quality assurance performance. Clin Chem Lab Med 2018;56:e226-8.

- Kuselman I, Pennecchi F. IUPAC/CITAC Guide: Classification, modeling and quantification of human errors in a chemical analytical laboratory (IUPAC Technical Report). Pure Appl Chem 2016;88:477-515.

- Peters GA, Peters BJ. Human Error: Causes and Control. 2nd edition. Boca Raton: CRC Press; 2006.

- Reason J. Understanding adverse events: human factors. Qual Health Care 1995;4:80-9.

- Wiliam D. The half-second delay: What follows? Pedagogy, Culture and Society 2006;14:71-81.

- Jones G, Calleja J, Chesher D, et al. Collective Opinion Paper on a 2013 AACB Workshop of Experts seeking Harmonisation of Approaches to Setting a Laboratory Quality Control Policy. Clin Biochem Rev 2015;36:87-95.

- Hollnagel E. Reliability of cognition: foundations of human reliability analysis. London: Academic Press; 1993.

- Parvin CA. Planning Statistical Quality Control to Minimize Patient Risk: It's About Time. Clin Chem 2018;64:249-50.

- Badrick T, Loh TP. Developing an evidence-based approach to quality control. Clin Biochem 2023;114:39-42.

- Brown AS, Badrick T. The next wave of innovation in laboratory automation: systems for auto-verification, quality control and specimen quality assurance. Clin Chem Lab Med 2022;61:37-43.

Cite this article as: Badrick T, Brown AS. Identifying human factors as a source of error in laboratory quality control. J Lab Precis Med 2023;8:16.